The New Makers

How generative AI reshaped product building

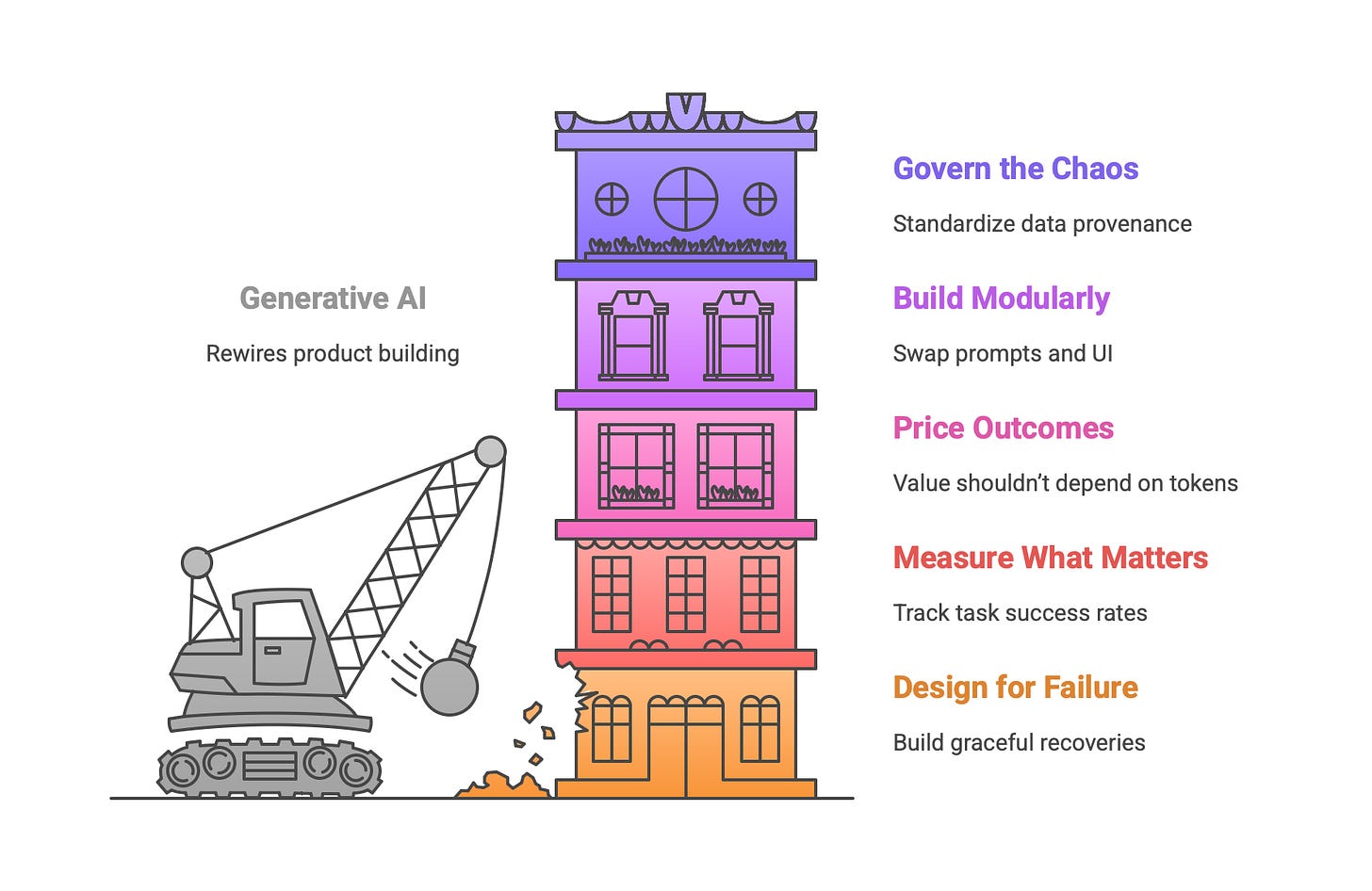

Generative AI didn’t just add features; it rewired product building.

By any honest account, the last few years in product have been a controlled detonation. Generative AI took a sledgehammer to our timelines, our teams, and our tolerance for ambiguity. If you build products, you now manage probabilities more than pixels. That’s not a fad. It’s a lasting realignment of how ideas become software and how software becomes value.

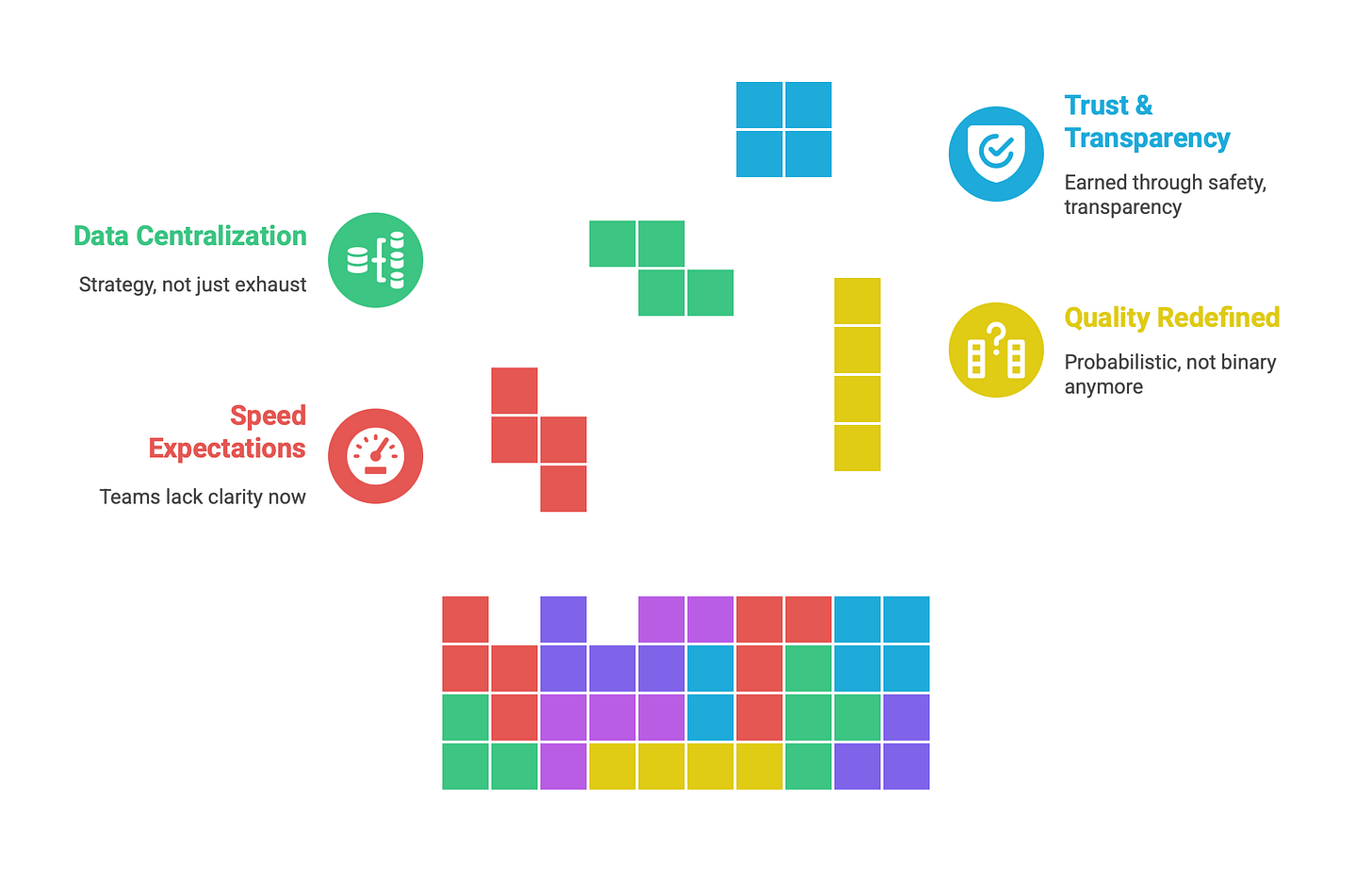

My thesis is simple: generative AI collapsed the distance between intent and implementation. The winners aren’t the ones who “added AI,” but the ones who rebuilt their product craft around four truths. Speed is now table stakes. Quality is probabilistic, not binary. Data is strategy, not exhaust. And trust, earned through transparency and safety, is the only moat that compounds.

What changed—fast

Start with speed. A prompt now stands where a sprint used to be. Prototypes emerge in hours rather than weeks: wireframes, code stubs, messaging, even synthetic user scenarios. Exploration got cheap. The cost of trying a bad idea fell toward zero, which means teams can pursue wider, parallel bets without betting the quarter. Good ideas get real sooner, and because they’re real, they get tested sooner. That acceleration is intoxicating, but it’s also unforgiving. When everything moves faster, teams that lack clarity don’t just stumble; they compound confusion at machine speed.

Products themselves have changed their posture. Interfaces learned to talk back. Copilots and assistants turned UIs into conversations where the canvas is tone, turn-taking, and recovery as much as layout. The empty state is no longer nothing; it’s what the model might say next. Designers who once obsessed over pixels find themselves scripting dialogue, refusals, and apologies. A graceful “I don’t know” has become a product feature, not a failure.

Requirements have become living conversations. Models draft PRDs, user stories, and acceptance criteria; humans adjudicate intent, constraints, and consequence. The product manager’s pen is still mighty, but the job shifts from authoring to curating and orchestrating. You’re no longer describing a static system so much as setting guardrails for a probabilistic one—defining what “good enough” means in context and what happens when the model is confidently wrong.

That redefinition extends to quality. Deterministic QA assumed fixed inputs would yield fixed outputs. Generative components don’t play by that rule. Teams now build evaluation harnesses, golden datasets, and confidence thresholds. “Works” is contextual: it means “works reliably enough for this use, with these safeguards, at this cost and latency.” If you don’t define “enough,” you’re not managing quality. You’re outsourcing it to chance.
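To make “define enough” concrete, here is a minimal sketch of an evaluation harness in Python. It assumes a hypothetical `generate` function standing in for a model call, a small golden dataset of prompts paired with pass/fail checks, and an illustrative 0.95 pass-rate bar. `GoldenCase`, `evaluate`, and `release_gate` are names invented for this example, not any particular library’s API. Each case is sampled several times because the same prompt can produce different outputs on different calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GoldenCase:
    """One hand-checked example: an input and a check its output must pass."""
    prompt: str
    check: Callable[[str], bool]


def evaluate(generate: Callable[[str], str], cases: list[GoldenCase],
             samples: int = 3) -> float:
    """Return the fraction of (case, sample) runs whose output passes its check.

    Each case is run several times because a generative component
    can answer the same prompt differently on each call.
    """
    passed = 0
    total = 0
    for case in cases:
        for _ in range(samples):
            total += 1
            if case.check(generate(case.prompt)):
                passed += 1
    return passed / total if total else 0.0


def release_gate(pass_rate: float, threshold: float = 0.95) -> bool:
    """'Good enough' made explicit: ship only if the pass rate meets the bar."""
    return pass_rate >= threshold


if __name__ == "__main__":
    # Hypothetical stand-in for a model call; a real harness would call a provider.
    def fake_model(prompt: str) -> str:
        return "Paris" if "France" in prompt else "I don't know"

    golden = [
        GoldenCase("What is the capital of France?", lambda out: "Paris" in out),
        # A graceful refusal counts as a pass for an unanswerable question.
        GoldenCase("What will the stock price be tomorrow?",
                   lambda out: "don't know" in out.lower()),
    ]
    rate = evaluate(fake_model, golden)
    print(f"pass rate: {rate:.2f}, ship: {release_gate(rate)}")
```

Run as a script, the stand-in model passes every case and prints `pass rate: 1.00, ship: True`. The part worth keeping is that the threshold is an explicit, reviewable number rather than a judgment made fresh at every release.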

Data, once an afterthought described as “exhaust,” is increasingly the engine. Retrieval pipelines, feedback loops, fine-tuning sets, and telemetry aren’t mere infrastructure; they are the product. The best teams version prompts and datasets the way they version code, treating them as first-class artifacts. Model choice has become a product decision laden with tradeoffs in cost, latency, privacy, and IP risk. If you can’t instrument it, you can’t ship it.

Who else changed

These shifts rewired roles. Product managers became system choreographers: they make calls on model selection, latency budgets, token economics, and safety policies, and they own the escalation paths when things go sideways. Designers widened the canvas to include dialogue, ambiguity, and repair. Engineers learned a new full stack: vectors, retrieval, tool use, caching, and evaluation. Data teams moved from collection to curation, where the craft is teaching through better examples rather than hoarding more of them.

What didn’t budge

For all that change, a few fundamentals didn’t budge. Problems still trump technology. “Because AI” is not a strategy; enduring products still start with painful, frequent, valuable problems. Clarity still compounds. Ambiguous goals paired with probabilistic systems are a recipe for chaos, so stating the user, the outcome, and the bar matters more than ever. And trust remains the moat. Privacy, attribution, and safety are table stakes. One hallucination in a critical workflow can unwind a year of growth. Show your work (citations, explanations, and fallbacks) or lose your user.

New rules of the road

New rules are emerging.

Design for failure first. Assume the model will be wrong sometimes and build graceful recoveries, citations, and human review into the experience where the stakes demand it.

Measure what matters. Task-level evaluations beat leaderboard scores; track effort saved, task success rates, and downstream outcomes, not just token counts.

Price outcomes, not tokens: the model underneath will change, but the value you charge for shouldn’t.

Build modularly so prompts, tools, policies, and UI can be swapped without tearing down the house.

And govern the chaos. Democratization without guardrails is debt, so standardize data provenance, prompt versioning, rollback procedures, and model-change monitoring.
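The “build modularly” rule can be sketched as a thin interface between product logic and whichever model sits behind it. This is a minimal Python illustration under stated assumptions: `TextModel`, `EchoModel`, `UpperModel`, the registry keys, the prompt ID, and the version strings are all hypothetical placeholders, and a real adapter would wrap a provider’s SDK rather than transform text locally. Product code depends only on the interface and a versioned prompt template, so swapping the model, or moving to a newly pinned version, becomes a configuration change.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """The only surface the product code depends on."""
    def complete(self, prompt: str) -> str: ...


@dataclass(frozen=True)
class EchoModel:
    """Hypothetical adapter: echoes the prompt tagged with its pinned version."""
    version: str  # a pinned identifier, never "latest"

    def complete(self, prompt: str) -> str:
        return f"[{self.version}] {prompt}"


@dataclass(frozen=True)
class UpperModel:
    """A second hypothetical adapter, to show that swapping needs no rewrite."""
    version: str

    def complete(self, prompt: str) -> str:
        return f"[{self.version}] {prompt.upper()}"


# Prompt templates live outside the code path, keyed by a versioned ID.
PROMPTS = {"summarize@v2": "Summarize for a busy reader: {text}"}


def summarize(model: TextModel, text: str, prompt_id: str = "summarize@v2") -> str:
    """Product logic: knows the prompt ID and the interface, not the vendor."""
    return model.complete(PROMPTS[prompt_id].format(text=text))


# Which model backs each role is configuration, not code.
REGISTRY: dict[str, TextModel] = {
    "primary": EchoModel(version="echo-2024-06-01"),
    "fallback": UpperModel(version="upper-1.3"),
}
```

For example, `summarize(REGISTRY["fallback"], "hi")` returns `"[upper-1.3] SUMMARIZE FOR A BUSY READER: HI"` with no change to `summarize` itself; only the registry entry decides which model answers.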

The reckoning

There is a reckoning, too. This moment tempts teams into theatrics: AI flourishes that demo well and deliver little. It widens the gap between organizations that can evaluate and those that can only integrate. It introduces upstream risk: a provider tweaks a model, and your downstream UX drifts. Ship anyway, but pin versions, watch behavior, and budget for re-evaluation the way you budget for a feature, not as an afterthought. Responsible AI isn’t a compliance box; it’s part of your brand promise.

So here’s the stance for builders. If your product isn’t measurably better because of AI, the AI is decoration. Cut it. If your system can’t explain itself, it won’t earn trust. Fix it now. If your team can’t swap models without a rewrite, you’ve built a trap. Re-architect it. And if your organization isn’t learning to evaluate, not just adopt, you’re already behind. Train it.

What’s next

What comes next is less about features and more about fabric. We’re moving from AI sprinkled into apps to AI woven through workflows and soon across organizations. On-device models will unlock private, low-latency experiences that were previously off-limits. Multimodal systems will blur the line between interface and assistant until “using software” feels more like collaborating with a partner.

The closer is both unfashionable and true: generative AI isn’t a shortcut around product discipline. It’s a force multiplier for teams that have it and a spotlight on teams that don’t. The job description didn’t change: understand people, deliver value, earn trust. The way we get there did. The new makers will pair machine speed with human judgment and build products that feel less like software and more like help.

In the news…

Andrew Ng says product management is the real bottleneck in AI.

Here are my thoughts on this.
